REDTOP computing requirements

The REDTOP experiment operates in a typical beam environment where the inelastic interaction rate of the proton beam with the target is ~1 GHz. The Level-0 and Level-1 triggers, implemented in hardware, will reduce this rate by a factor of ~10^4 before sending the data to a compute farm for the Level-2 trigger and preliminary reconstruction.
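As a quick check of the numbers above, the following minimal sketch computes the event rate that reaches the Level-2 farm. The two constants are the approximate figures quoted in the text; the function name is illustrative only.

```python
# Rate budget for the hardware trigger stages, using the approximate
# figures quoted in the text (both are order-of-magnitude estimates).
INTERACTION_RATE_HZ = 1e9   # ~1 GHz inelastic interaction rate
HARDWARE_REJECTION = 1e4    # combined Level-0 + Level-1 reduction factor


def level2_input_rate(interaction_rate_hz: float, rejection: float) -> float:
    """Return the event rate (Hz) delivered to the Level-2 compute farm."""
    return interaction_rate_hz / rejection


rate_hz = level2_input_rate(INTERACTION_RATE_HZ, HARDWARE_REJECTION)
print(f"Level-2 input rate: {rate_hz / 1e3:.0f} kHz")
```

Under these assumptions the Level-2 farm has to sustain an input rate of order 100 kHz.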

In terms of storage requirements, the experiment is expected to generate approximately 2.5 PB of production data and approximately 2 PB of processed data each year. It is proposed that Fermilab house the production data on tape storage for each year of operation and provide an allocation on dCache for staging data. In addition, the collaboration can leverage OSG Connect, which provides ephemeral storage to stage data for grid jobs.

In terms of compute requirements, REDTOP’s single-core computational workflow has proven to be well suited to the Distributed High Throughput Computing (DHTC) environment of the OSG. The computing scheme proposed here aims to accommodate the dataflow from the full experimental apparatus. Extrapolating from REDTOP jobs currently running on the OSG, it is estimated that the collaboration would need approximately 90 million core-hours annually: 55 million core-hours for Monte Carlo jobs and 35 million core-hours for data reconstruction jobs.
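To put the storage and compute estimates in more familiar units, the sketch below converts them into a time-averaged data rate and an equivalent number of continuously busy cores. It assumes decimal petabytes (10^15 bytes) and a load spread uniformly over a 365-day year. Neither assumption comes from the text, and real running will be burstier.

```python
# Annual estimates quoted in the text.
PRODUCTION_PB_PER_YEAR = 2.5
PROCESSED_PB_PER_YEAR = 2.0
MC_CORE_HOURS = 55e6
RECO_CORE_HOURS = 35e6

# Assumptions (not from the text): decimal petabytes, uniform load all year.
BYTES_PER_PB = 1e15
HOURS_PER_YEAR = 365 * 24
SECONDS_PER_YEAR = HOURS_PER_YEAR * 3600

total_pb = PRODUCTION_PB_PER_YEAR + PROCESSED_PB_PER_YEAR
total_core_hours = MC_CORE_HOURS + RECO_CORE_HOURS

# Average rate at which production data would have to be written.
avg_production_rate_mb_s = (
    PRODUCTION_PB_PER_YEAR * BYTES_PER_PB / SECONDS_PER_YEAR / 1e6
)

# Number of cores that would have to run around the clock.
avg_busy_cores = total_core_hours / HOURS_PER_YEAR

print(f"Total new data per year:      {total_pb:.1f} PB")
print(f"Average production data rate: {avg_production_rate_mb_s:.0f} MB/s")
print(f"Equivalent busy cores:        {avg_busy_cores:.0f}")
```

With these inputs the request corresponds to roughly 10,000 cores in continuous use and an average production data rate of a little under 80 MB/s.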

The computing model

With the above considerations in mind, we assume that the output data stream from the Level-2 farm will be staged at Fermilab’s (FNAL) dCache storage and, eventually, preprocessed on site. The process will require an allocation on the General Purpose Grid (GPGrid). Local access to the files in dCache is enabled via a POSIX-like interface over an NFS mount. In the present context, dCache will serve as a high-speed, ephemeral front-end storage that provides access to FNAL’s tape system. Since direct access to tape is limited to on-site machines, data must first be staged to dCache for off-site access. To increase the flexibility of the model and to let participating institutions choose the implementation that suits them best, several options are discussed.

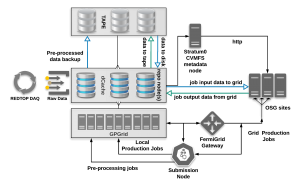

The collaboration plans to process the bulk of the experimental results with jobs submitted from FermiGrid or OSG Connect submission nodes. An example of a job submission for REDTOP from FNAL is shown in Figure 1. A submission node sends jobs to the grid, while data are delivered to grid jobs by first staging them on dCache and then transporting them to the remote sites via a common protocol such as GridFTP. It is proposed that Fermilab maintain an origin (stratum-0) repository for REDTOP data in the distributed CernVM File System (CVMFS), which will then deliver data and software to remote compute sites over HTTP. Reconstruction or analysis data designated for long-term storage can then be archived to tape at FNAL by first transferring them back onto the dCache storage.

The collaboration will continue to use the OSG Connect submission nodes to launch jobs to the OSG. OSG Connect provides a collaborative environment that gives a multi-institutional collaboration like REDTOP a single point of submission to the OSG. Researchers from the individual institutions, not necessarily affiliated with the laboratory hosting the experiment, can get an account on OSG Connect for job submission and access to transient storage space. A dedicated submission node, independent of the OSG Connect servers, can also be provisioned for the exclusive use of the collaboration if required and if funds are available.
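Jobs on OSG submission nodes are described with HTCondor submit descriptions. The following minimal sketch renders one for a batch of single-core jobs. The wrapper script name `run_redtop.sh`, the resource requests, the job count, and the `+ProjectName` value are hypothetical placeholders, not the collaboration's actual configuration.

```python
def render_submit_description(executable: str, n_jobs: int,
                              project: str = "REDTOP") -> str:
    """Render an HTCondor submit description for n_jobs single-core jobs.

    All values are illustrative placeholders; real memory and disk
    requests would have to be tuned to the actual workload.
    """
    lines = [
        "universe = vanilla",
        f"executable = {executable}",
        # Pass the job index so each job can pick its own input or seed.
        "arguments = $(Process)",
        "output = job.$(Cluster).$(Process).out",
        "error = job.$(Cluster).$(Process).err",
        "log = job.$(Cluster).log",
        # The workflow is single-core, as stated in the text.
        "request_cpus = 1",
        "request_memory = 2GB",
        "request_disk = 4GB",
        # Accounting attribute; the project name here is an assumption.
        f'+ProjectName = "{project}"',
        f"queue {n_jobs}",
    ]
    return "\n".join(lines) + "\n"


submit_text = render_submit_description("run_redtop.sh", n_jobs=100)
print(submit_text)
```

The resulting text could be saved to a file and passed to `condor_submit` on a submission node.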

Figure 1: REDTOP workflow at Fermilab.

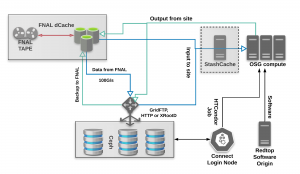

Collaborative shared storage for OSG Connect is provided by Stash, a Ceph storage system that offers temporary storage capacity for OSG projects. During production, REDTOP will use Stash to store the processed output from the jobs before distributing it to the various endpoints of the collaboration. Processed data can also be stored back to tape at FNAL. Figure 2 shows an example of such a data processing workflow using OSG Connect. Users will be able to submit jobs to the grid from the login server. A Stratum-0 server hosts a CVMFS repository of the REDTOP software stack, which is based on the GenieHad[2] and Geant4[3] Monte Carlo software as well as the reconstruction framework. The server’s role is to distribute the collaboration’s software stack and tools to the remote sites where the jobs are running. Alternatively, the CVMFS software repository can be hosted and managed by the OSG Application Installation Service (OASIS).

Production-quality raw data will need to be distributed to the grid site running the job, either via GridFTP from FNAL’s dCache as in Figure 1 or directly from OSG Connect Stash if a copy of the input data is staged there. Because the use of GridFTP is expected to decline (Globus support has ended), alternative methods to transfer data include the XRootD[4] and WebDAV/HTTPS protocols. Raw data could also be served to the compute site from the data origin at FNAL using OSG’s StashCache infrastructure. StashCache[5] is the OSG data caching service that stores data needed by a project in proximity to the compute sites. Requesting data from the nearest StashCache instead of the origin shortens the network path to the worker node at the remote site.
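One way a job wrapper could cope with several available transfer protocols is to try them in a fixed order of preference. The sketch below illustrates the idea. The preference order, the `example.org` endpoints, and the injected `copy` callable are all assumptions made for illustration; a real wrapper would call an actual transfer client in place of `demo_copy`.

```python
from typing import Callable, Dict, List, Optional

# Assumed preference order: XRootD first, then WebDAV/HTTPS, GridFTP last.
PREFERRED_SCHEMES = ("root", "https", "gsiftp")


def candidate_urls(path: str, endpoints: Dict[str, str]) -> List[str]:
    """Build one URL per available protocol, in order of preference."""
    return [
        f"{endpoints[scheme].rstrip('/')}/{path.lstrip('/')}"
        for scheme in PREFERRED_SCHEMES
        if scheme in endpoints
    ]


def fetch_with_fallback(urls: List[str],
                        copy: Callable[[str], None]) -> Optional[str]:
    """Try each URL in turn; return the first that copies successfully."""
    for url in urls:
        try:
            copy(url)
            return url
        except OSError as err:
            print(f"transfer failed for {url}: {err}")
    return None


def demo_copy(url: str) -> None:
    """Stand-in for a real transfer tool; pretends the XRootD door is down."""
    if url.startswith("root://"):
        raise OSError("door unavailable")


# Hypothetical endpoints, not real REDTOP or FNAL hosts.
ENDPOINTS = {
    "gsiftp": "gsiftp://gridftp.example.org/redtop",
    "https": "https://webdav.example.org/redtop",
    "root": "root://xrootd.example.org//redtop",
}

urls = candidate_urls("raw/run0001.dat", ENDPOINTS)
chosen = fetch_with_fallback(urls, demo_copy)
print(f"fetched via: {chosen}")
```

Passing the transfer function in as a parameter keeps the fallback logic independent of any particular client, so the same loop works whichever tools are installed at a given site.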

Figure 2: REDTOP workflow using OSG Connect.

While it is not on the critical path of the processing campaign for the experimental data, the REDTOP collaboration will pursue the decentralization of job submission to the OSG by having member institutions provision and deploy their own submission hosts. Furthermore, contingent upon the availability of local computing resources, member institutions can join the OSG federation and accept jobs from OSG’s GlideinWMS[6] job factory via a HostedCE[7,8] deployment.